Introduction

Welcome to another writeup of a challenge from HackTheBox, one of the best learning platforms for hackers. This challenge is part of the Getting Started module and can be found here. It is an excellent starting point for anyone interested in offensive security or red teaming.

Disclaimer: The content presented in this article is for educational purposes only and does not endorse or encourage any form of unauthorized access or malicious activity.

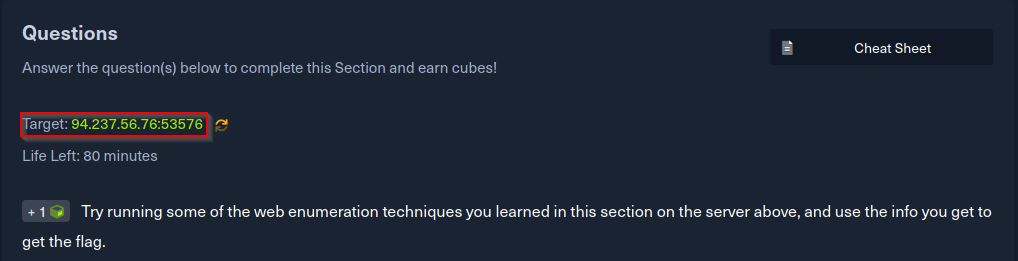

Web Enumeration Challenge

Observations & Findings

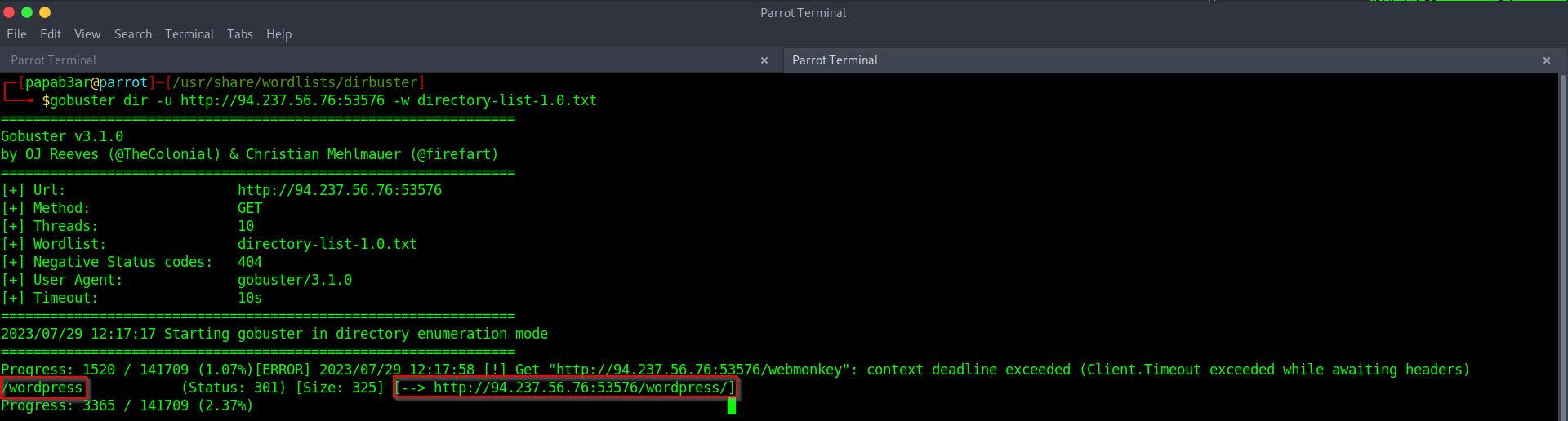

At first glance, the webpage showed very little information. My next step was to inspect the page’s source code, which is often a good starting point for such challenges. Unfortunately, the source did not reveal anything substantial either. So I turned to gobuster, a tool that brute-forces directory names from a wordlist, to look for paths that are not linked from the page.

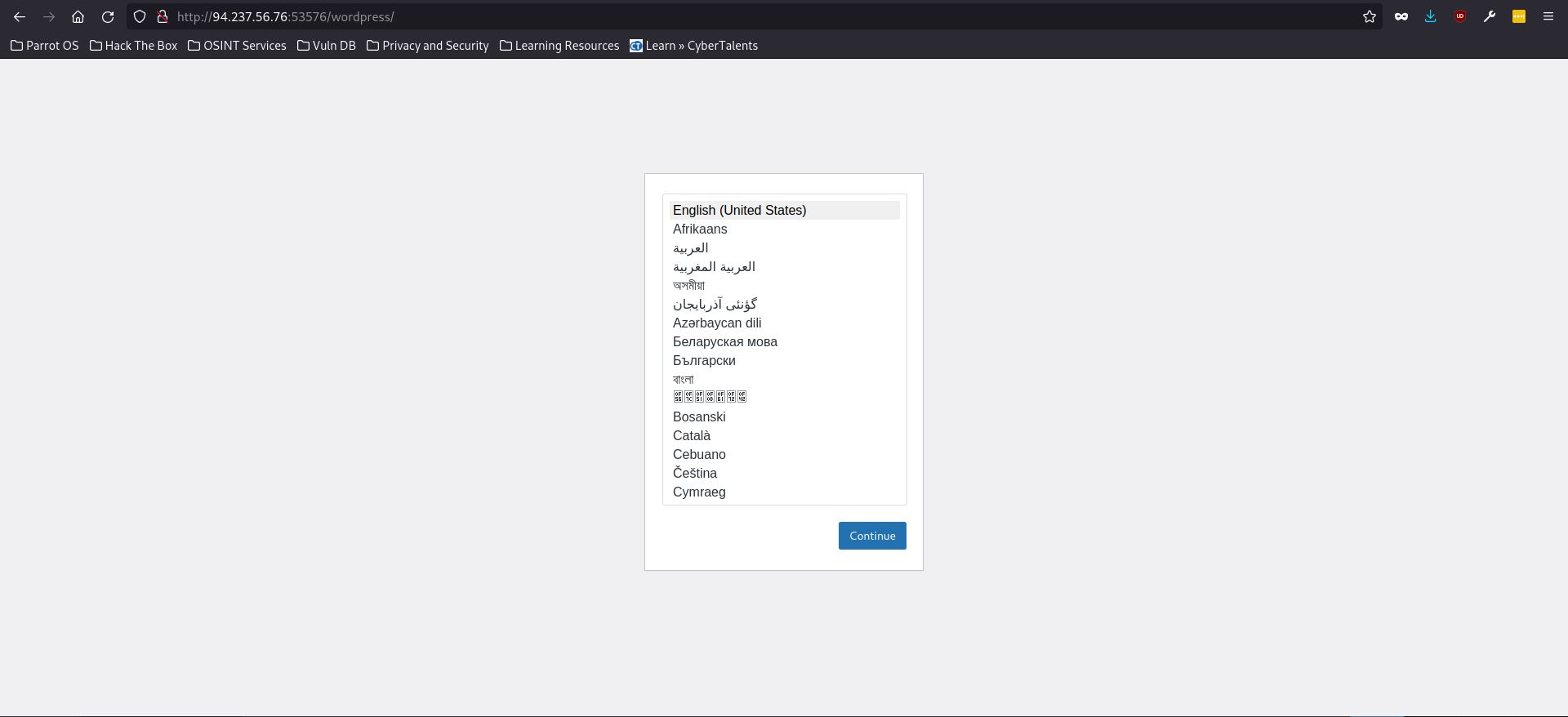
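To make the idea behind directory brute-forcing concrete, here is a minimal Python sketch. It is not how gobuster is implemented and it does not reproduce the command from the screenshot; it only illustrates the principle of requesting candidate paths and keeping the ones that do not come back as "not found". The names `http_status` and `find_directories`, the host `target.example`, and the wordlist entries are all hypothetical, and the demo uses a fake status function so it runs without touching the network.

```python
from typing import Callable, Iterable, List, Tuple
from urllib.error import HTTPError
from urllib.request import urlopen


def http_status(url: str) -> int:
    """Return the HTTP status for url, or 0 if the request fails entirely.

    Note: urlopen follows redirects, so this reports the final status.
    """
    try:
        with urlopen(url, timeout=5) as response:
            return response.status
    except HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError; the code is still useful.
        return err.code
    except OSError:
        # Covers URLError, timeouts, refused connections, and similar.
        return 0


def find_directories(
    base_url: str,
    words: Iterable[str],
    get_status: Callable[[str], int] = http_status,
    hide: Tuple[int, ...] = (0, 404),
) -> List[Tuple[str, int]]:
    """Try each word as a directory under base_url and keep interesting hits."""
    found = []
    for word in words:
        word = word.strip()
        if not word or word.startswith("#"):
            continue  # skip blank lines and wordlist comments
        url = f"{base_url.rstrip('/')}/{word}/"
        status = get_status(url)
        if status not in hide:
            found.append((url, status))
    return found


# Offline demo with a fake server: only /admin/ "exists" (hypothetical data).
def fake_status(url: str) -> int:
    return 200 if url.endswith("/admin/") else 404


hits = find_directories("http://target.example/", ["admin", "images"], fake_status)
print(hits)
```

Passing the status function as a parameter keeps the loop testable; against a lab target you are authorized to scan, the default `http_status` would issue real requests instead.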

This approach paid off: gobuster discovered a directory. Navigating to it revealed a page that appeared to be under construction, possibly an incomplete WordPress site.

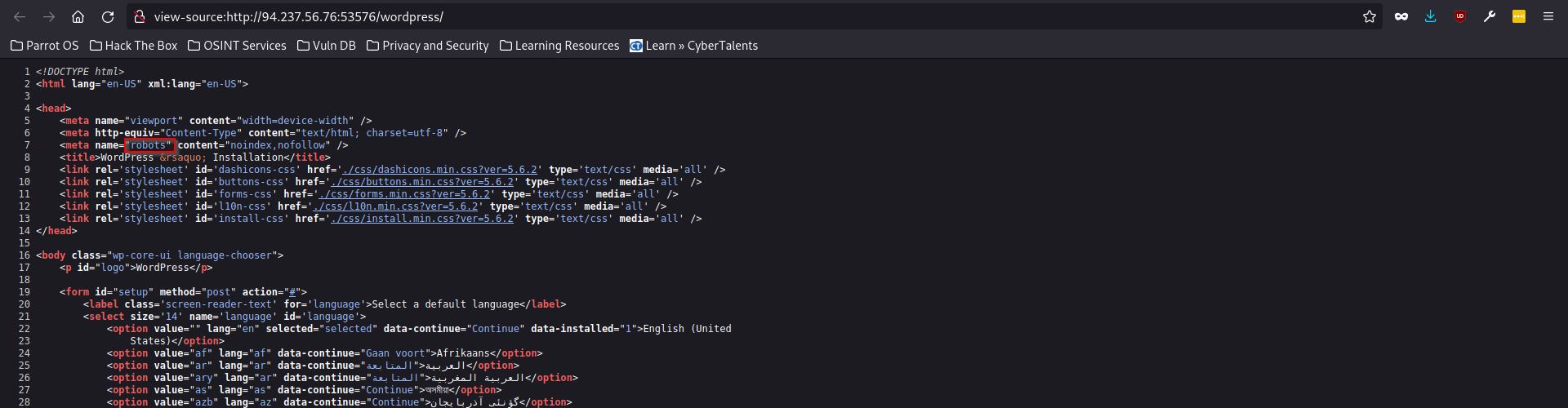

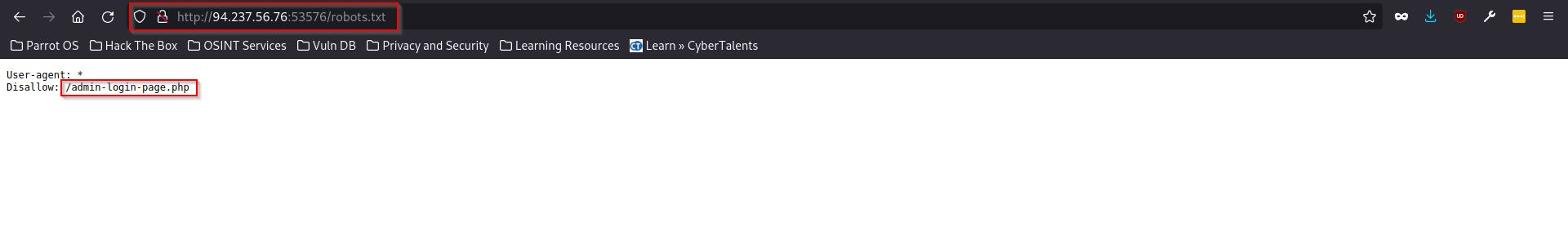

Once again, I decided to inspect the page’s source code, hoping to find something useful. This time, I stumbled upon a section related to web crawlers. Web crawlers, also known as spiders or spiderbots, systematically browse the World Wide Web on behalf of search engines for indexing purposes. Websites often use a file called robots.txt to tell these crawlers which directories they should not visit. Because the file is publicly readable, it can also point a curious visitor to paths the site owner would rather keep out of search results.

Armed with this knowledge, I attempted to access the robots.txt file to view its contents.

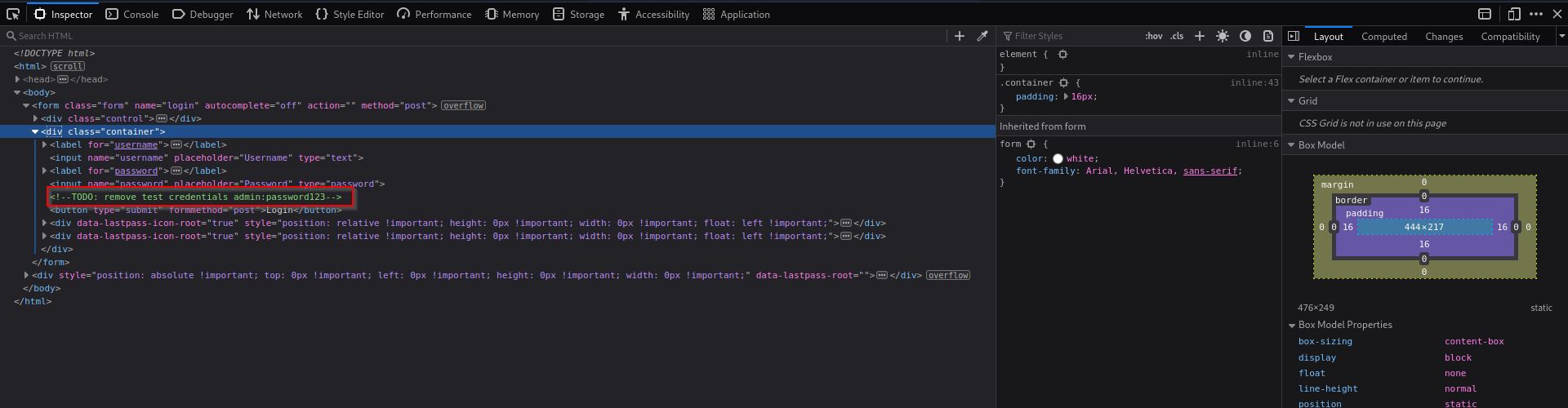
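When a robots.txt file is longer than a few lines, pulling out the `Disallow` entries programmatically can help decide which paths to visit next. The sketch below is a simplified reading of the format that ignores per-crawler `User-agent` grouping. The function name `disallowed_paths` and the sample file contents are hypothetical and are not taken from the challenge.

```python
from typing import List


def disallowed_paths(robots_txt: str) -> List[str]:
    """Collect the non-empty Disallow values from robots.txt text."""
    paths = []
    for line in robots_txt.splitlines():
        # Drop trailing comments, then surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        value = value.strip()
        # Field names are case-insensitive; an empty Disallow blocks nothing.
        if field.strip().lower() == "disallow" and value:
            paths.append(value)
    return paths


# Hypothetical sample, not the file from the challenge.
sample = """\
User-agent: *
Disallow: /private-area/   # made-up path
Disallow:
Allow: /public/
"""

print(disallowed_paths(sample))
```

Each returned path is a candidate to open in the browser, since robots.txt only asks crawlers to stay away and does not restrict access by itself.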

Once again, I inspected the source code of this page and came across some interesting comments. It seemed that the web developer had forgotten to remove comments containing login credentials for the admin user.
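Reading the source by eye works for a small page, but comments are easy to miss in longer markup. As a rough sketch, Python’s standard `html.parser` module can list every HTML comment in a page. The class name `CommentCollector`, the helper `extract_comments`, and the sample markup with its placeholder credentials are all hypothetical; the real comments from the challenge are not reproduced here.

```python
from html.parser import HTMLParser
from typing import List


class CommentCollector(HTMLParser):
    """Gather the text of every <!-- ... --> comment fed to the parser."""

    def __init__(self) -> None:
        super().__init__()
        self.comments: List[str] = []

    def handle_comment(self, data: str) -> None:
        text = data.strip()
        if text:
            self.comments.append(text)


def extract_comments(html: str) -> List[str]:
    """Return all non-empty HTML comments found in the given markup."""
    collector = CommentCollector()
    collector.feed(html)
    collector.close()
    return collector.comments


# Hypothetical page source with placeholder credentials.
page = """
<html>
  <body>
    <!-- TODO: remove test account USER:PASS before launch -->
    <p>Login</p>
  </body>
</html>
"""

for comment in extract_comments(page):
    print(comment)
```

Comments are sent to every visitor along with the rest of the page, which is why leftover notes like these count as an information leak.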

Page code inspection follows:

Solution/Flag

Using the credentials found in the comments, I successfully logged in as an admin user, which led me to a new page containing the flag for the challenge.

Conclusion

This challenge provided a great opportunity to apply web enumeration techniques to uncover hidden information and identify potential vulnerabilities. The process involved inspecting page source code, using gobuster for directory enumeration, and understanding what a robots.txt file can reveal. It also showed why secure coding practices matter: credentials left in comments are visible to anyone who views the source.

As a hacker, it’s crucial to continuously explore and learn new techniques. Happy hacking, and never stop learning!